Unlike monocular cameras, which rely on a single image sensor, multi-view systems use a set of connected, synchronized image sensors. Depending on how these sensors are arranged, such a system can capture the same physical scene from several viewpoints. The sensors can face inward (concave configuration), face outward (convex configuration) or lie in a common plane in order to:

- Reconstruct a super-resolution image

- Display the captured videos in stereoscopic or multiscopic 3D

- Recover a depth map

- Produce a panoramic view of a scene
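To make the depth-map application concrete, here is a minimal sketch of the standard pinhole relation for a rectified pair of sensors lying in the same plane: depth is Z = f·B/d, where f is the focal length in pixels, B the baseline between the two optical centres and d the disparity in pixels. The function name and the numeric values below are hypothetical illustrations, not parameters of the planned camera.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth Z = f * B / d for a rectified stereo pair (pinhole camera model).

    focal_px     -- focal length expressed in pixels (hypothetical value below)
    baseline_m   -- distance between the two optical centres, in metres
    disparity_px -- horizontal shift of the same scene point between the two images
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity; negative is invalid.
        raise ValueError("disparity must be strictly positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical sensor pair: f = 800 px, B = 5 cm, measured disparity = 20 px.
print(depth_from_disparity(800.0, 0.05, 20.0))  # 2.0 (metres)
```

Because depth is inversely proportional to disparity, a wider baseline between two of the nine sensors improves depth resolution for distant objects, which is one reason the spatial arrangement of the sensors matters.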

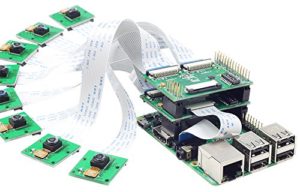

The aim of this thesis is to study and then design a realistic demonstrator of an intelligent camera with 9 image sensors. The main difference from existing (mostly commercial) systems is the use of an FPGA/SoC-based processing core, which would compute the scene geometry and run the image processing algorithms while ensuring perfect synchronization between the sensors.

Example of an Arducam-based multi-view camera

As a first step, the system will be modeled in MATLAB to define the processing chain and to quantify the computational requirements that the FPGA will have to handle for the super-resolution scheme. The same chain will then be ported to the FPGA through a SoC/VHDL co-design stage, leading to the final design of the electronics for the 9 cameras and the processing core.
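Quantifying the computational needs usually starts with the raw pixel throughput of all sensors. The sketch below assumes hypothetical figures (1280×720 sensors at 30 fps, 10-bit raw output, a 150 MHz FPGA clock, one pixel processed per clock per pipeline); none of these values come from the project, and the function names are illustrative only.

```python
def pixel_rate(n_sensors: int, width: int, height: int, fps: int) -> int:
    """Total number of pixels per second delivered by all sensors together."""
    return n_sensors * width * height * fps


def raw_bandwidth_bps(rate: int, bits_per_pixel: int) -> int:
    """Raw (uncompressed) data rate in bits per second."""
    return rate * bits_per_pixel


def pipelines_needed(rate: int, clock_hz: int, pixels_per_clock: int = 1) -> int:
    """Parallel pixel pipelines required if each processes pixels_per_clock per cycle.

    Uses integer ceiling division so the result always covers the full rate.
    """
    capacity = clock_hz * pixels_per_clock
    return -(-rate // capacity)


# Hypothetical configuration: 9 sensors, 1280x720 @ 30 fps, 10-bit raw, 150 MHz clock.
rate = pixel_rate(9, 1280, 720, 30)
print(rate)                           # 248832000 pixels/s
print(raw_bandwidth_bps(rate, 10))    # 2488320000 bits/s (about 2.5 Gbit/s)
print(pipelines_needed(rate, 150_000_000))  # 2
```

The same skeleton can be extended with a per-pixel operation count measured on the MATLAB model, giving a first estimate of the FPGA resources needed before any VHDL is written.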

This work spans two distinct areas: the design of original electronic systems, and computer vision in a context that, to the best of our knowledge, has not yet been explored. In addition, designing and developing a multi-view camera with no constraints on the spatial arrangement of its sensors offers clear added value compared to most commercial systems.

Padawan DREAM